Econometrics

Econometrics is an application of statistical methods to economic data in order to give empirical content to economic relationships.[1] More precisely, it is "the quantitative analysis of actual economic phenomena based on the concurrent development of theory and observation, related by appropriate methods of inference."[2] An introductory economics textbook describes econometrics as allowing economists "to sift through mountains of data to extract simple relationships."[3] Jan Tinbergen is one of the two founding fathers of econometrics.[4][5][6] The other, Ragnar Frisch, also coined the term in the sense in which it is used today.[7]

A basic tool for econometrics is the multiple linear regression model.[8] Econometric theory uses statistical theory and mathematical statistics to evaluate and develop econometric methods.[9][10] Econometricians try to find estimators that have desirable statistical properties including unbiasedness, efficiency, and consistency. Applied econometrics uses theoretical econometrics and real-world data for assessing economic theories, developing econometric models, analysing economic history, and forecasting.

History

Some of the forerunners include Gregory King, Francis Ysidro Edgeworth, Vilfredo Pareto, and Sir William Petty's Political Arithmetick.[11] Early pioneering works in econometrics include Henry Ludwell Moore's Synthetic Economics.[11]

Basic models: linear regression

A basic tool for econometrics is the multiple linear regression model.[8] In modern econometrics, other statistical tools are frequently used, but linear regression is still the most frequently used starting point for an analysis.[8] Estimating a linear regression on two variables can be visualized as fitting a line through data points representing paired values of the independent and dependent variables.

For example, consider Okun's law, which relates GDP growth to the unemployment rate. This relationship is represented in a linear regression where the change in unemployment rate ($\Delta\text{Unemployment}$) is a function of an intercept ($\beta_0$), a given value of GDP growth multiplied by a slope coefficient $\beta_1$, and an error term, $\varepsilon$:

$$\Delta\text{Unemployment} = \beta_0 + \beta_1 \text{Growth} + \varepsilon$$

The unknown parameters $\beta_0$ and $\beta_1$ can be estimated. Here $\beta_0$ is estimated to be 0.83 and $\beta_1$ is estimated to be -1.77. This means that if GDP growth increased by one percentage point, the unemployment rate would be predicted to drop by 1.77 × 1 = 1.77 points, other things held constant. The model could then be tested for statistical significance as to whether an increase in GDP growth is associated with a decrease in unemployment, as hypothesized. If the estimate of $\beta_1$ were not significantly different from 0, the test would fail to find evidence that changes in the growth rate and unemployment rate were related. The variance in a prediction of the dependent variable (unemployment) as a function of the independent variable (GDP growth) is given in polynomial least squares.
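The estimation step described above can be illustrated with a minimal Python sketch. The data here are synthetic, not real GDP or unemployment figures: they are generated from an intercept of 0.83 and a slope of -1.77 (the estimates quoted above) plus an assumed normal error term, and the helper names `ols_simple` and `slope_t_statistic` are hypothetical. The t-statistic uses the classical formula that assumes homoskedastic errors.

```python
import random


def ols_simple(x, y):
    """Return (intercept, slope) of the least-squares line y = b0 + b1 * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def slope_t_statistic(x, y):
    """t-statistic for H0: slope = 0, assuming homoskedastic errors."""
    n = len(x)
    b0, b1 = ols_simple(x, y)
    mean_x = sum(x) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    rss = sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y))
    std_err = (rss / (n - 2) / sxx) ** 0.5  # standard error of the slope
    return b1 / std_err


# Synthetic sample (an assumption for illustration, not observed data).
rng = random.Random(0)
growth = [rng.uniform(-2.0, 6.0) for _ in range(200)]
d_unemployment = [0.83 - 1.77 * g + rng.gauss(0.0, 1.0) for g in growth]

b0_hat, b1_hat = ols_simple(growth, d_unemployment)
t_slope = slope_t_statistic(growth, d_unemployment)
print(f"intercept = {b0_hat:.2f}, slope = {b1_hat:.2f}, t(slope) = {t_slope:.1f}")
```

A slope estimate whose t-statistic is large in absolute value would lead to rejecting the hypothesis that GDP growth and the change in unemployment are unrelated, which is the test described in the paragraph above.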

Theory

Econometric theory uses statistical theory and mathematical statistics to evaluate and develop econometric methods.[9][10] Econometricians try to find estimators that have desirable statistical properties including unbiasedness, efficiency, and consistency. An estimator is unbiased if its expected value is the true value of the parameter; it is consistent if it converges to the true value as the sample size gets larger; and it is efficient if it has a lower standard error than other unbiased estimators for a given sample size. Ordinary least squares (OLS) is often used for estimation since it provides the BLUE or "best linear unbiased estimator" (where "best" means the most efficient among linear unbiased estimators) given the Gauss-Markov assumptions. When these assumptions are violated or other statistical properties are desired, other estimation techniques such as maximum likelihood estimation, generalized method of moments, or generalized least squares are used. Estimators that incorporate prior beliefs are advocated by those who favour Bayesian statistics over traditional, classical or "frequentist" approaches.
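Unbiasedness and consistency can be illustrated with a small Monte Carlo experiment. The following Python sketch is only an illustration under assumed settings: the true slope of 2.0, the sample sizes of 20 and 500, the standard normal regressor and error, and the function names are all hypothetical choices. It draws many samples, estimates the OLS slope in each, and compares the average and the spread of the estimates across sample sizes.

```python
import random
import statistics


def ols_slope(x, y):
    """Least-squares slope of y on x (with an intercept)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy / sxx


def simulate_slopes(n, reps, true_slope, rng):
    """Estimate the slope in `reps` independent samples of size `n`."""
    slopes = []
    for _ in range(reps):
        x = [rng.gauss(0.0, 1.0) for _ in range(n)]
        y = [1.0 + true_slope * xi + rng.gauss(0.0, 1.0) for xi in x]
        slopes.append(ols_slope(x, y))
    return slopes


rng = random.Random(42)
TRUE_SLOPE = 2.0  # assumed value, for illustration only

small = simulate_slopes(20, 500, TRUE_SLOPE, rng)
large = simulate_slopes(500, 500, TRUE_SLOPE, rng)

mean_small, sd_small = statistics.mean(small), statistics.stdev(small)
mean_large, sd_large = statistics.mean(large), statistics.stdev(large)

# Unbiasedness: the average estimate is close to the true slope at both sizes.
# Consistency: the spread of the estimates shrinks as the sample grows.
print(f"n=20:  mean = {mean_small:.3f}, sd = {sd_small:.3f}")
print(f"n=500: mean = {mean_large:.3f}, sd = {sd_large:.3f}")
```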

Methods

Applied econometrics uses theoretical econometrics and real-world data for assessing economic theories, developing econometric models, analysing economic history, and forecasting.[12]

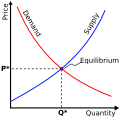

Econometrics uses standard statistical models to study economic questions, but most often these are based on observational data, rather than data from controlled experiments.[13] In this, the design of observational studies in econometrics is similar to the design of studies in other observational disciplines, such as astronomy, epidemiology, sociology and political science. Analysis of data from an observational study is guided by the study protocol, although exploratory data analysis may be useful for generating new hypotheses.[14] Economics often analyses systems of equations and inequalities, such as supply and demand hypothesized to be in equilibrium. Consequently, the field of econometrics has developed methods for identification and estimation of simultaneous equations models. These methods are analogous to methods used in other areas of science, such as the field of system identification in systems analysis and control theory. Such methods may allow researchers to estimate models and investigate their empirical consequences, without directly manipulating the system.

In the absence of evidence from controlled experiments, econometricians often seek illuminating natural experiments or apply quasi-experimental methods to draw credible causal inference.[15] The methods include regression discontinuity design, instrumental variables, and difference-in-differences.
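As an illustration of one of these methods, the simplest difference-in-differences estimate compares the change in average outcomes of a treated group with the change in a control group over the same period. The Python sketch below is a minimal version of that two-group, two-period calculation; the function name and the outcome values are hypothetical, and the estimate has a causal interpretation only under the assumption that both groups would have followed parallel trends without the treatment.

```python
from statistics import mean


def diff_in_diff(treated_pre, treated_post, control_pre, control_post):
    """Two-group, two-period difference-in-differences of group means."""
    treated_change = mean(treated_post) - mean(treated_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change


# Hypothetical outcomes (made-up numbers, for illustration only).
treated_pre = [10.0, 12.0, 11.0]
treated_post = [15.0, 17.0, 16.0]
control_pre = [9.0, 10.0, 11.0]
control_post = [11.0, 12.0, 13.0]

effect = diff_in_diff(treated_pre, treated_post, control_pre, control_post)
print(f"difference-in-differences estimate: {effect:.1f}")
```

Here the treated group's mean rises by 5 and the control group's by 2, so the estimate attributes the remaining 3 to the treatment.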

Example

A simple example of a relationship in econometrics from the field of labour economics is:

$$\ln(\text{wage}) = \beta_0 + \beta_1 (\text{years of education}) + \varepsilon$$

This example assumes that the natural logarithm of a person's wage is a linear function of the number of years of education that person has acquired. The parameter $\beta_1$ measures the increase in the natural log of the wage attributable to one more year of education. The term $\varepsilon$ is a random variable representing all other factors that may have direct influence on wage. The econometric goal is to estimate the parameters, $\beta_0$ and $\beta_1$, under specific assumptions about the random variable $\varepsilon$. For example, if $\varepsilon$ is uncorrelated with years of education, then the equation can be estimated with ordinary least squares.

If the researcher could randomly assign people to different levels of education, the data set thus generated would allow estimation of the effect of changes in years of education on wages. In reality, those experiments cannot be conducted. Instead, the econometrician observes the years of education of and the wages paid to people who differ along many dimensions. Given this kind of data, the estimated coefficient on years of education in the equation above reflects both the effect of education on wages and the effect of other variables on wages, if those other variables were correlated with education. For example, people born in certain places may have higher wages and higher levels of education. Unless the econometrician controls for place of birth in the above equation, the effect of birthplace on wages may be falsely attributed to the effect of education on wages.

The most obvious way to control for birthplace is to include a measure of the effect of birthplace in the equation above. Exclusion of birthplace, together with the assumption that $\varepsilon$ is uncorrelated with education, produces a misspecified model. Another technique is to include in the equation an additional set of measured covariates which are not instrumental variables, yet render $\beta_1$ identifiable.[16] An overview of econometric methods used to study this problem was provided by Card (1999).[17]
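The consequence of omitting birthplace can be illustrated with a simulation. The Python sketch below uses entirely hypothetical numbers: an assumed return to education of 0.08, an assumed birthplace wage premium of 0.30, and a binary birthplace indicator that also raises average schooling by two years. It compares the education coefficient from a regression that omits birthplace with the coefficient from one that includes it; the helper names are illustrative.

```python
import random


def demean(values):
    m = sum(values) / len(values)
    return [v - m for v in values]


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def slope_one_regressor(x, y):
    """OLS slope of y on x, with an intercept."""
    xd, yd = demean(x), demean(y)
    return dot(xd, yd) / dot(xd, xd)


def slopes_two_regressors(x1, x2, y):
    """OLS slopes of y on x1 and x2 (with an intercept), via 2x2 normal equations."""
    a, b, yd = demean(x1), demean(x2), demean(y)
    s11, s22, s12 = dot(a, a), dot(b, b), dot(a, b)
    s1y, s2y = dot(a, yd), dot(b, yd)
    det = s11 * s22 - s12 * s12
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


# Hypothetical data-generating process (all parameter values are assumptions).
rng = random.Random(1)
TRUE_RETURN = 0.08       # effect of one more year of education on log wage
BIRTHPLACE_PREMIUM = 0.30
birthplace, education, log_wage = [], [], []
for _ in range(5000):
    bp = 1.0 if rng.random() < 0.5 else 0.0
    educ = 12.0 + 2.0 * bp + rng.gauss(0.0, 2.0)  # birthplace raises schooling
    wage = 1.5 + TRUE_RETURN * educ + BIRTHPLACE_PREMIUM * bp + rng.gauss(0.0, 0.3)
    birthplace.append(bp)
    education.append(educ)
    log_wage.append(wage)

short_b1 = slope_one_regressor(education, log_wage)               # omits birthplace
long_b1, long_b2 = slopes_two_regressors(education, birthplace, log_wage)
print(f"education coefficient, birthplace omitted:  {short_b1:.3f}")
print(f"education coefficient, birthplace included: {long_b1:.3f}")
```

In this setup the regression that omits birthplace attributes part of the birthplace premium to education, so its coefficient is biased upward, while the regression that controls for birthplace recovers a value close to the assumed 0.08.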

Journals

The main journals that publish work in econometrics are:

- Econometrica, which is published by Econometric Society.[18]

- The Review of Economics and Statistics, which is over 100 years old.[19]

- The Econometrics Journal, which was established by the Royal Economic Society.[20]

- The Journal of Econometrics, which also publishes the supplement Annals of Econometrics.[21]

- Econometric Theory, which is a theoretical journal.[22]

- The Journal of Applied Econometrics, which applies econometrics to a wide variety of problems.[23]

- Econometric Reviews, which includes reviews on econometric books and software as well.[24]

- The Journal of Business & Economic Statistics, which is published by the American Statistical Association.[25]

Limitations and criticisms

Integrating statistics into economic theory to develop claims of causality has led to disagreements within the discipline, resulting in criticisms of econometrics. Most of these criticisms have been resolved as a result of the credibility revolution and the improved rigor of the potential outcomes framework, used today by applied economists, microeconomists and econometricians for generating results that interpret causality. While developments by econometricians to improve statistical measures began in the mid-1960s, the 2009 publication of Mostly Harmless Econometrics by economists Joshua D. Angrist and Jörn-Steffen Pischke summarized the improvements in econometric modeling. Structural causal modeling, which attempts to formalize the limitations of quasi-experimental methods from a causality perspective and so allows experimenters to precisely quantify the risks of quasi-experimental research, is the primary academic response to this critique.

Like other forms of statistical analysis, badly specified econometric models may show a spurious relationship where two variables are correlated but causally unrelated. In a study of the use of econometrics in major economics journals, McCloskey concluded that some economists report p-values (following the Fisherian tradition of tests of significance of point null-hypotheses) and neglect concerns of type II errors; some economists fail to report estimates of the size of effects (apart from statistical significance) and to discuss their economic importance. She also argues that some economists also fail to use economic reasoning for model selection, especially for deciding which variables to include in a regression.[26][27]

In some cases, economic variables cannot be experimentally manipulated as treatments randomly assigned to subjects.[28] In such cases, economists rely on observational studies, often using data sets with many strongly associated covariates, resulting in enormous numbers of models with similar explanatory ability but different covariates and regression estimates. Regarding the plurality of models compatible with observational data-sets, Edward Leamer urged that "professionals ... properly withhold belief until an inference can be shown to be adequately insensitive to the choice of assumptions".[28]

Difficulties in model specification

Like other forms of statistical analysis, badly specified econometric models may show a spurious correlation where two variables are correlated but causally unrelated. Economist Ronald Coase is widely reported to have said "if you torture the data long enough it will confess".[29] Deirdre McCloskey argues that in published econometric work, economists often fail to use economic reasoning for including or excluding variables, conflate statistical significance with substantive significance, and fail to report the power of their findings.[30]
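A well-known setting in which spurious correlation arises is the comparison of two independent trending series. The Python sketch below is an illustration under assumed settings (random walks with standard normal steps, 200 periods, 200 replications, hypothetical function names), not an example taken from the works cited above. It generates pairs of random walks that are unrelated by construction and compares the typical size of their correlation in levels with the correlation of their period-to-period changes.

```python
import random


def correlation(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    sab = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    saa = sum((x - mean_a) ** 2 for x in a)
    sbb = sum((y - mean_b) ** 2 for y in b)
    return sab / (saa * sbb) ** 0.5


def random_walk(n, rng):
    """Cumulative sum of n independent standard normal steps."""
    level, path = 0.0, []
    for _ in range(n):
        level += rng.gauss(0.0, 1.0)
        path.append(level)
    return path


def first_differences(series):
    return [later - earlier for earlier, later in zip(series, series[1:])]


rng = random.Random(7)
abs_corr_levels, abs_corr_diffs = [], []
for _ in range(200):
    x = random_walk(200, rng)
    y = random_walk(200, rng)  # generated independently of x
    abs_corr_levels.append(abs(correlation(x, y)))
    abs_corr_diffs.append(
        abs(correlation(first_differences(x), first_differences(y)))
    )

mean_abs_levels = sum(abs_corr_levels) / len(abs_corr_levels)
mean_abs_diffs = sum(abs_corr_diffs) / len(abs_corr_diffs)
print(f"mean |correlation| in levels:      {mean_abs_levels:.2f}")
print(f"mean |correlation| in differences: {mean_abs_diffs:.2f}")
```

Although the two series in each pair are independent, their levels are on average far more strongly correlated than their changes, which is why a model specified in levels can suggest a relationship that does not exist.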

Economic variables are observed in reality, and therefore are not readily isolated for experimental testing. Edward Leamer argued that there was no essential difference between econometric analysis and randomized trials or controlled trials, provided the use of statistical techniques reduces the specification bias, the effects of collinearity between the variables, to the same order as the uncertainty due to the sample size.[31] Today, this critique is no longer binding, as advances in identification are stronger. Identification today may report the average treatment effect (ATE), the average treatment effect on the treated (ATT), or the local average treatment effect (LATE).[32] Specification bias, or selection bias, can be easily removed through advances in sampling techniques and the ability to sample much larger populations through improved communications, data storage, and randomization techniques. Secondly, collinearity can easily be controlled for through instrumental variables. By reporting either ATT or LATE, researchers can control for or eliminate heterogeneous error, reporting only the effects on the group as defined.

Economists, when using data, may have a number of explanatory variables they want to use that are highly collinear, such that researcher bias may be important in variable selection. Leamer argues that economists can mitigate this by running statistical tests with different specified models and discarding any inferences which prove to be "fragile", concluding that "professionals ... properly withhold belief until an inference can be shown to be adequately insensitive to the choice of assumptions."[31] Today, this is known as p-hacking, and is not a failure of econometric methodology, but is instead a potential failure of a researcher who may be seeking to prove their own hypothesis.[33] P-hacking is not accepted in economics, and is countered by the requirement to disclose original data and the code used to perform statistical analysis.[34] However, Sala-i-Martin[35] argued that it is possible to specify two models suggesting contrary relations between two variables. The phenomenon was labeled the "emerging recalcitrant result" phenomenon by Robert Goldfarb.[36] This is known as two-way causality, and should be discussed with respect to the underlying theory that the mechanism is attempting to capture.

Kennedy (1998, pp. 1–2) reports econometricians as being accused of using sledgehammers to crack open peanuts. That is, they use a wide range of complex statistical techniques while turning a blind eye to data deficiencies and the many questionable assumptions required for the application of these techniques.[37] Kennedy quotes Stefan Valavanis's 1959 Econometrics textbook's critique of practice:

Econometric theory is like an exquisitely balanced French recipe, spelling out precisely with how many turns to mix the sauce, how many carats of spice to add, and for how many milliseconds to bake the mixture at exactly 474 degrees of temperature. But when the statistical cook turns to raw materials, he finds that hearts of cactus fruit are unavailable, so he substitutes chunks of cantaloupe; where the recipe calls for vermicelli he used shredded wheat; and he substitutes green garment die for curry, ping-pong balls for turtles eggs, and for Chalifougnac vintage 1883, a can of turpentine. (1959, p.83)[38]

Macroeconomic critiques

Looking primarily at macroeconomics, Lawrence Summers has criticized econometric formalism, arguing that "the empirical facts of which we are most confident and which provide the most secure basis for theory are those that require the least sophisticated statistical analysis to perceive." Summers is not critiquing the methodology itself but instead its usefulness in developing macroeconomic theory.

He looks at two well-cited macroeconometric studies (Hansen & Singleton (1982, 1983), and Bernanke (1986)), and argues that while both make brilliant use of econometric methods, neither paper speaks to formal theoretical proof. Noting that in the natural sciences, "investigators rush to check out the validity of claims made by rival laboratories and then build on them," Summers points out that this rarely happens in economics, which to him is a result of the fact that "the results [of econometric studies] are rarely an important input to theory creation or the evolution of professional opinion more generally." To Summers:[39]

Successful empirical research has been characterized by attempts to gauge the strength of associations rather than to estimate structural parameters, verbal characterizations of how causal relations might operate rather than explicit mathematical models, and the skillful use of carefully chosen natural experiments rather than sophisticated statistical technique to achieve identification.

Lucas critique

Robert Lucas criticised the use of overly simplistic econometric models of the macroeconomy to predict the implications of economic policy, arguing that the structural relationships observed in historical models break down if decision makers adjust their preferences to reflect policy changes. Lucas argued that policy conclusions drawn from contemporary large-scale macroeconometric models were invalid as economic actors would change their expectations of the future and adjust their behaviour accordingly. A good macroeconometric model should incorporate microfoundations to model the effects of policy change, with equations representing economic representative agents responding to economic changes based on rational expectations of the future, implying that their pattern of behaviour might be quite different if economic policy changed.

Austrian School critique

The current-day Austrian School of Economics typically rejects much of econometric modeling. The historical data used to make econometric models, they claim, represents behavior under circumstances idiosyncratic to the past; thus econometric models show correlational, not causal, relationships. Econometricians have addressed this criticism by adopting quasi-experimental methodologies. Austrian school economists remain skeptical of these corrected models, continuing in their belief that statistical methods are unsuited for the social sciences.[40]

The Austrian School holds that the counterfactual must be known for a causal relationship to be established. The changes due to the counterfactual could then be extracted from the observed changes, leaving only the changes caused by the variable. Meeting this critique is very challenging since "there is no dependable method for ascertaining the uniquely correct counterfactual" for historical data.[41] For non-historical data, the Austrian critique is met with randomized controlled trials. Randomized controlled trials must be purposefully prepared, which historical data is not.[42] The use of randomized controlled trials is becoming more common in social science research. In the United States, for example, the Education Sciences Reform Act of 2002 made funding for education research contingent on scientific validity defined in part as "experimental designs using random assignment, when feasible."[43] In answering questions of causation, parametric statistics only addresses the Austrian critique in randomized controlled trials.

If the data is not from a randomized controlled trial, econometricians meet the Austrian critique with quasi-experimental methodologies, including identifying and exploiting natural experiments. These methodologies attempt to extract the counterfactual post-hoc so that the use of the tools of parametric statistics is justified. Since parametric statistics depends on any observation following a Gaussian distribution, which is only guaranteed by the central limit theorem in a randomization methodology, the use of tools such as the confidence interval will be outside of their specification: the amount of selection bias will always be unknown.[44]

References

- ^ M. Hashem Pesaran (1987). "Econometrics", The New Palgrave: A Dictionary of Economics, v. 2, p. 8 [pp. 8–22]. Reprinted in J. Eatwell et al., eds. (1990). Econometrics: The New Palgrave, p. 1 Archived 15 March 2023 at the Wayback Machine [pp. 1–34]. Abstract Archived 18 May 2012 at the Wayback Machine (2008 revision by J. Geweke, J. Horowitz, and H. P. Pesaran).

- ^ P. A. Samuelson, T. C. Koopmans, and J. R. N. Stone (1954). "Report of the Evaluative Committee for Econometrica", Econometrica 22(2), p. 142 [pp. 141–146], as described and cited in Pesaran (1987) above.

- ^ Paul A. Samuelson and William D. Nordhaus, 2004. Economics. 18th ed., McGraw-Hill, p. 5.

- ^ "1969 - Jan Tinbergen: Nobelprijs economie - Elsevierweekblad.nl". elsevierweekblad.nl. 12 October 2015. Archived from the original on 1 May 2018. Retrieved 1 May 2018.

- ^ Magnus, Jan & Mary S. Morgan (1987) The ET Interview: Professor J. Tinbergen in: Econometric Theory 3, 1987, 117–142.

- ^ Willekens, Frans (2008) International Migration in Europe: Data, Models and Estimates. New Jersey. John Wiley & Sons: 117.

- ^ • H. P. Pesaran (1990), "Econometrics", Econometrics: The New Palgrave, p. 2 Archived 15 March 2023 at the Wayback Machine, citing Ragnar Frisch (1936), "A Note on the Term 'Econometrics'", Econometrica, 4(1), p. 95.
• Aris Spanos (2008), "statistics and economics", The New Palgrave Dictionary of Economics, 2nd Edition. Abstract. Archived 18 May 2012 at the Wayback Machine

- ^ a b c Greene, William (2012). "Chapter 1: Econometrics". Econometric Analysis (7th ed.). Pearson Education. pp. 47–48. ISBN 9780273753568. "Ultimately, all of these will require a common set of tools, including, for example, the multiple regression model, the use of moment conditions for estimation, instrumental variables (IV) and maximum likelihood estimation. With that in mind, the organization of this book is as follows: The first half of the text develops fundamental results that are common to all the applications. The concept of multiple regression and the linear regression model in particular constitutes the underlying platform of most modeling, even if the linear model itself is not ultimately used as the empirical specification."

- ^ a b Greene, William (2012). Econometric Analysis (7th ed.). Pearson Education. pp. 34, 41–42. ISBN 9780273753568.

- ^ a b Wooldridge, Jeffrey (2012). "Chapter 1: The Nature of Econometrics and Economic Data". Introductory Econometrics: A Modern Approach (5th ed.). South-Western Cengage Learning. p. 2. ISBN 9781111531041.

- ^ a b Tintner, Gerhard (1953). "The Definition of Econometrics". Econometrica. 21 (1): 31–40. doi:10.2307/1906941. ISSN 0012-9682. JSTOR 1906941.

- ^ Clive Granger (2008). "forecasting", The New Palgrave Dictionary of Economics, 2nd Edition. Abstract. Archived 18 May 2012 at the Wayback Machine

- ^ Wooldridge, Jeffrey (2013). Introductory Econometrics, A modern approach. South-Western, Cengage learning. ISBN 978-1-111-53104-1.

- ^ Herman O. Wold (1969). "Econometrics as Pioneering in Nonexperimental Model Building", Econometrica, 37(3), pp. 369–381. Archived 24 August 2017 at the Wayback Machine

- ^ Angrist, Joshua D.; Pischke, Jörn-Steffen (May 2010). "The Credibility Revolution in Empirical Economics: How Better Research Design is Taking the Con out of Econometrics". Journal of Economic Perspectives. 24 (2): 3–30. doi:10.1257/jep.24.2.3. hdl:1721.1/54195. ISSN 0895-3309.

- ^ Pearl, Judea (2000). Causality: Model, Reasoning, and Inference. Cambridge University Press. ISBN 978-0521773621.

- ^ Card, David (1999). "The Causal Effect of Education on Earning". In Ashenfelter, O.; Card, D. (eds.). Handbook of Labor Economics. Amsterdam: Elsevier. pp. 1801–1863. ISBN 978-0444822895.

- ^ "Home". www.econometricsociety.org. Retrieved 14 February 2024.

- ^ "The Review of Economics and Statistics". direct.mit.edu. Retrieved 14 February 2024.

- ^ "The Econometrics Journal". Wiley.com. Archived from the original on 6 October 2011. Retrieved 8 October 2013.

- ^ "Journal of Econometrics". www.scimagojr.com. Retrieved 14 February 2024.

- ^ "Home". Retrieved 14 March 2024.

- ^ "Journal of Applied Econometrics". Journal of Applied Econometrics. doi:10.1002/(ISSN)1099-1255. hdl:2027.42/34956.

- ^ Econometric Reviews Print ISSN: 0747-4938 Online ISSN: 1532-4168 https://www.tandfonline.com/action/journalInformation?journalCode=lecr20

- ^ "Journals". Default. Retrieved 14 February 2024.

- ^ McCloskey (May 1985). "The Loss Function has been mislaid: the Rhetoric of Significance Tests". American Economic Review. 75 (2).

- ^ Stephen T. Ziliak and Deirdre N. McCloskey (2004). "Size Matters: The Standard Error of Regressions in the American Economic Review", Journal of Socio-Economics, 33(5), pp. 527–46. Archived 25 June 2010 at the Wayback Machine

- ^ a b Leamer, Edward (March 1983). "Let's Take the Con out of Econometrics". American Economic Review. 73 (1): 31–43. JSTOR 1803924.

- ^ Gordon Tullock, "A Comment on Daniel Klein's 'A Plea to Economists Who Favor Liberty'", Eastern Economic Journal, Spring 2001, note 2 (Text: "As Ronald Coase says, 'if you torture the data long enough it will confess'." Note: "I have heard him say this several times. So far as I know he has never published it.")

- ^ McCloskey, D.N. (May 1985). "The Loss Function has been mislaid: the Rhetoric of Significance Tests" (PDF). American Economic Review. 75 (2): 201–205.

- ^ a b Leamer, Edward (March 1983). "Let's Take the Con out of Econometrics". American Economic Review. 73 (1): 31–43. JSTOR 1803924.

- ^ "2 Probability and Regression Review – Causal Inference: The Mixtape". mixtape.scunning.com. Retrieved 26 October 2025.

- ^ Brodeur, Abel; Carrell, Scott; Figlio, David; Lusher, Lester (November 2023). "Unpacking P-hacking and Publication Bias". American Economic Review. 113 (11): 2974–3002. doi:10.1257/aer.20210795. ISSN 0002-8282.

- ^ "AEA Data and Code Policies and Guidance". www.aeaweb.org. Retrieved 26 October 2025.

- ^ Sala-i-Martin, Xavier X (November 1997). "I Just Ran Four Million Regressions". Working Paper Series. doi:10.3386/w6252.

- ^ Goldfarb, Robert S. (December 1997). "Now you see it, now you don't: emerging contrary results in economics". Journal of Economic Methodology. 4 (2): 221–244. doi:10.1080/13501789700000016. ISSN 1350-178X.

- ^ Kennedy, P (1998) A Guide to Econometrics, Blackwell, 4th Edition

- ^ Valavanis, Stefan (1959) Econometrics, McGraw-Hill

- ^ Summers, Lawrence (June 1991). "The Scientific Illusion in Empirical Macroeconomics". Scandinavian Journal of Economics. 93 (2): 129–148. doi:10.2307/3440321. JSTOR 3440321.

- ^ Garrison, Roger. "Mises and His Methods", in The Meaning of Ludwig von Mises: Contributions in Economics, Sociology, Epistemology, and Political Philosophy, ed. Herbener, pp. 102–117.

- ^ DeMartino, George F. (2021). "The specter of irreparable ignorance: counterfactuals and causality in economics". Review of Evolutionary Political Economy. 2 (2): 253–276. doi:10.1007/s43253-020-00029-w. ISSN 2662-6136. PMC 7792558. PMID 38624569.

- ^ Angrist, Joshua; Pischke, Jörn-Steffen (15 December 2008). Mostly Harmless Econometrics. Princeton University Press. ISBN 978-1400829828.

- ^ Education Sciences Reform Act of 2002, Pub. L. 107–279; Approved Nov. 5, 2002; 116 Stat. 1941, As Amended Through P.L. 117–286, Enacted December 27, 2022 "https://www.govinfo.gov/content/pkg/COMPS-747/pdf/COMPS-747.pdf"

- ^ Harris, Anthony D.; McGregor, Jessina C.; Perencevich, Eli N.; Furuno, Jon P.; Zhu, Jingkun; Peterson, Dan E.; Finkelstein, Joseph (2006). "The Use and Interpretation of Quasi-Experimental Studies in Medical Informatics". Journal of the American Medical Informatics Association. 13 (1): 16–23. doi:10.1197/jamia.M1749. ISSN 1067-5027. PMC 1380192. PMID 16221933.

Further reading

- Econometric Theory book on Wikibooks

- Giovannini, Enrico Understanding Economic Statistics, OECD Publishing, 2008, ISBN 978-92-64-03312-2

- Kmenta, J. (2025). Econometrics: A failed science?. In International Encyclopedia of Statistical Science (pp. 773-776). Springer, Berlin, Heidelberg.

- Hendry, D. F. (2000). Econometrics: alchemy or science?: essays in econometric methodology. OUP Oxford.[1]

- Moosa, I. A. (2017). Econometrics as a con art: exposing the limitations and abuses of econometrics. Edward Elgar Publishing.

- Pinto, H. (2011). The role of econometrics in economic science: An essay about the monopolization of economic methodology by econometric methods. The Journal of Socio-Economics, 40(4), 436-443.

- Swann, G. P. (2006). Putting econometrics in its place: a new direction in applied economics. Edward Elgar Publishing.[2]

External links

- Journal of Financial Econometrics

- Econometric Society

- The Econometrics Journal

- Econometric Links

- Teaching Econometrics (Index by the Economics Network (UK))

- Applied Econometric Association

- The Society for Financial Econometrics Archived 17 November 2012 at the Wayback Machine

- The interview with Clive Granger – Nobel winner in 2003, about econometrics

- ^ Hansen, Bruce E. “Methodology: Alchemy or Science?” The Economic Journal, vol. 106, no. 438, 1996, pp. 1398–413. JSTOR, https://doi.org/10.2307/2235531. Accessed 22 Oct. 2025.

- ^ Adkisson, Richard V. (2008) "Putting Econometrics in its Place: A New Direction in Applied Economics." Review of Social Economy, Vol. 66, No. 1, pp. 127–129.